Can Language Models Resolve Real-World GitHub Issues? 🤔

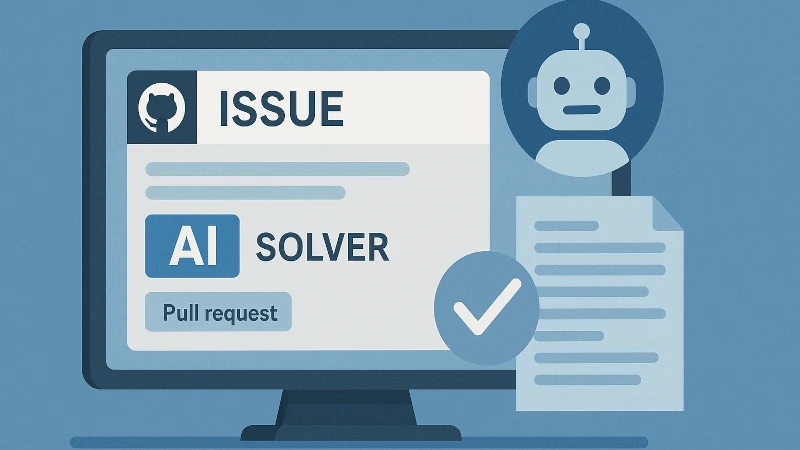

I recently discovered SWE-bench, a fascinating benchmark that evaluates language models on their ability to resolve real GitHub issues. Developed by researchers from Princeton University and the University of Chicago, SWE-bench is a testbed for measuring how well AI systems handle realistic software engineering work.

What is SWE-bench?

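SWE-bench consists of 2,294 software engineering problems drawn from real GitHub issues and their corresponding pull requests across 12 popular Python repositories. For each task, a language model receives the issue description and the repository's codebase and must produce a patch that resolves the issue, often editing multiple functions, classes, and files. A task counts as resolved when tests taken from the original pull request pass after the patch is applied and previously passing tests still pass. (arxiv.org)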
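
To make the task format concrete, here is a minimal sketch of a single task instance and its pass/fail check. The field names loosely mirror the public dataset (`instance_id`, `problem_statement`, `FAIL_TO_PASS`, `PASS_TO_PASS`), but treat them as assumptions; the `TaskInstance` class and `is_resolved` helper are hypothetical, and the real evaluation harness runs each repository's test suite in an isolated environment rather than taking results as a dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class TaskInstance:
    """Hypothetical, simplified view of one SWE-bench task."""
    instance_id: str          # identifier such as "owner__repo-1234"
    repo: str                 # GitHub repository the issue comes from
    problem_statement: str    # text of the GitHub issue given to the model
    fail_to_pass: list[str] = field(default_factory=list)  # tests the fix must make pass
    pass_to_pass: list[str] = field(default_factory=list)  # tests that must keep passing


def is_resolved(task: TaskInstance, test_results: dict[str, bool]) -> bool:
    """Return True only if every required test passed after applying the patch.

    `test_results` maps test names to pass/fail; a test missing from the
    results is treated as a failure.
    """
    required = task.fail_to_pass + task.pass_to_pass
    return all(test_results.get(name, False) for name in required)


# Usage with made-up data (not a real SWE-bench instance):
task = TaskInstance(
    instance_id="example__project-1",
    repo="example/project",
    problem_statement="Calling parse('') raises IndexError instead of ValueError.",
    fail_to_pass=["tests/test_parse.py::test_empty_string"],
    pass_to_pass=["tests/test_parse.py::test_basic"],
)
print(is_resolved(task, {
    "tests/test_parse.py::test_empty_string": True,
    "tests/test_parse.py::test_basic": True,
}))  # True
print(is_resolved(task, {"tests/test_parse.py::test_empty_string": True}))  # False
```

Requiring the previously passing tests as well as the new ones means a patch cannot "fix" the issue by breaking unrelated behavior.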

Performance of AI Models

At the time of its introduction, the best-performing model evaluated, Claude 2, resolved only 1.96% of the issues. Results have improved considerably since then: Claude 3.7 Sonnet, for instance, has been reported to reach a 33.83% success rate on the full SWE-bench benchmark. (dev.to)
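
Both figures are resolution rates: tasks resolved divided by tasks attempted. For a rough sense of scale, the sketch below converts each percentage into a task count, assuming it was computed over all 2,294 tasks (an assumption; a reported number may come from a different split or run). The helper names are my own.

```python
def resolved_rate(num_resolved: int, num_tasks: int) -> float:
    """Percentage of tasks resolved."""
    if num_tasks <= 0:
        raise ValueError("num_tasks must be positive")
    return 100.0 * num_resolved / num_tasks


def approx_resolved(rate_percent: float, num_tasks: int) -> int:
    """Approximate number of resolved tasks implied by a percentage."""
    return round(rate_percent / 100.0 * num_tasks)


FULL_SET = 2294  # task count of the full SWE-bench set

print(approx_resolved(1.96, FULL_SET))        # 45
print(approx_resolved(33.83, FULL_SET))       # 776
print(f"{resolved_rate(45, FULL_SET):.2f}%")  # 1.96%
```

Under that assumption, the jump is from roughly 45 resolved issues to roughly 776.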

OpenAI has also collaborated with the SWE-bench authors to release SWE-bench Verified, a human-validated subset of 500 samples vetted for quality and clarity. The vetting screens out problems such as overly specific unit tests, which can reject otherwise valid fixes, and issue descriptions too underspecified to solve.
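
The idea behind a vetted subset can be sketched as a filter over human review labels. This is only an illustration: the `Annotation` fields, the 0 to 3 severity scale, and the cutoff are hypothetical stand-ins, not OpenAI's actual annotation schema or selection procedure.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    """Hypothetical human review of one task (not the real schema)."""
    instance_id: str
    underspecified: int   # hypothetical scale: 0 (clear issue) to 3 (severely underspecified)
    invalid_tests: int    # hypothetical scale: 0 (fair tests) to 3 (tests reject valid fixes)


def vetted_subset(annotations: list[Annotation], max_severity: int = 1) -> list[str]:
    """Keep tasks whose issue text and tests were both judged acceptable."""
    return [
        a.instance_id
        for a in annotations
        if a.underspecified <= max_severity and a.invalid_tests <= max_severity
    ]


reviews = [
    Annotation("task-a", underspecified=0, invalid_tests=0),
    Annotation("task-b", underspecified=2, invalid_tests=0),  # issue too vague
    Annotation("task-c", underspecified=1, invalid_tests=3),  # tests overly specific
]
print(vetted_subset(reviews))  # ['task-a']
```

A task is dropped if either problem is serious, since a vague issue or an unfair test can each make a correct model look wrong.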

Why It Matters

SWE-bench offers a realistic and challenging environment for testing the practical coding abilities of AI models. Unlike traditional benchmarks that focus on writing isolated functions, SWE-bench tasks require navigating an entire codebase and often coordinating changes across several files.

For AI enthusiasts and developers, SWE-bench is a valuable resource for exploring the current capabilities and limitations of AI in software engineering.

Hashtags: #AI #SoftwareEngineering #MachineLearning #GitHub #Benchmarking